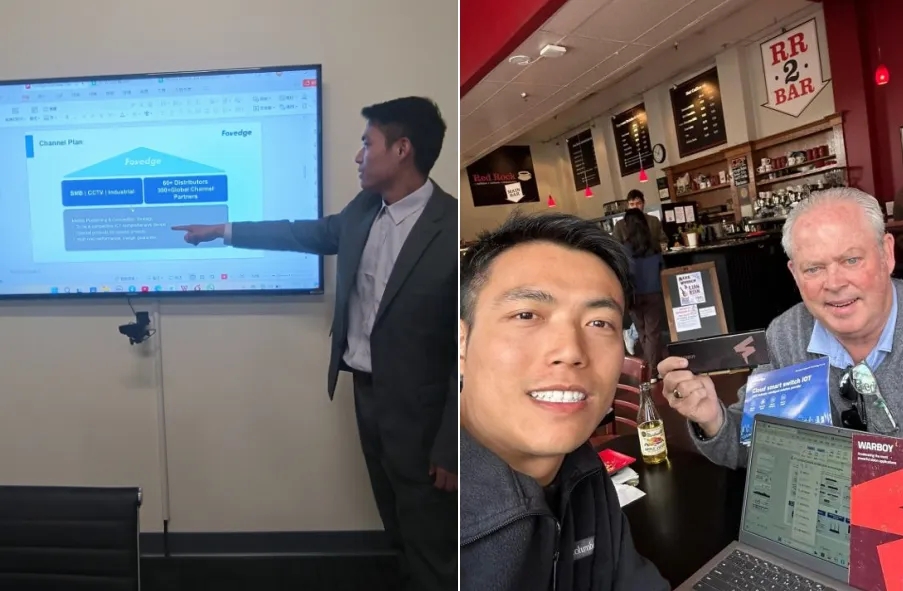

In April 2024, a team from the US branch of Shenzhen Fengrunda Technology Co., Ltd. visited integrator partners in Silicon Valley, California, USA. The visit aimed to deepen strategic relationships with global partners, advance network technology, and deliver competitive network integration solutions. As enterprises worldwide undergo digital transformation, Fengrunda Technology continues to pursue its global channel expansion strategy and to help partners accelerate the digital transformation of enterprises in their markets.

According to IDC data, the AI network market reached $2.5 billion in 2023, and investment in AI infrastructure is expected to reach $182 billion in 2024 and grow to $300 billion by 2026. By 2027, AI network revenue is projected to exceed $10 billion, with Ethernet accounting for more than $6 billion. Meanwhile, bandwidth demand from AI workloads is expected to grow by more than 90% annually, indicating very strong momentum.

Li Jianwen, Strategic Marketing Director of Fengrunda Technology’s US subsidiary, stated: “AI will become the most important growth driver in the Ethernet switch market over the next decade. As AI continues to spread, its workloads are growing exponentially, and network infrastructure is reaching its limits.” AI infrastructure must support large, complex workloads running on individual compute and storage nodes that work together as logical clusters. The AI network connects these workloads through a high-capacity interconnect fabric, which gives Fengrunda’s S6700 series of 100G data center core switches a significant market opportunity. The series has already been well received by many integrator partners and end customers, and it has great potential in the era of AI networking.

The team from Fengrunda Technology’s US branch introduced its broad product line to partners, spanning the access, aggregation, and core layers; wired and wireless networking; and everything from traditional switching to all-optical networks. The team also highlighted its 100G core high-speed switches for data centers and its full series of 2.5G SONiC switch solutions for government and enterprise markets, showcasing Fengrunda Technology’s technical depth and industry insight across market segments. Our S6700 series 100G core high-speed switches have been deployed in the labs of US AI software customers and have received positive feedback from numerous ISP customers. As a key component in data center network upgrades, 100G switches have attracted widespread market attention. With the rapid development of cloud computing, big data, the Internet of Things (IoT), and artificial intelligence (AI), data center network infrastructure will face unprecedented challenges. The high bandwidth and low latency of 100G switches help deliver a faster and more reliable network experience for users.

In addition to product and solution introductions, the Silicon Valley visit included in-depth discussion sessions. The team from Fengrunda Technology’s US subsidiary shared the company’s strategic plans for the North American market and its experience in global market operations, and discussed with partners how to build and maintain a strong, efficient sales and service network. The US team and partners also discussed future market development, exploring how Fengrunda Technology’s technical strengths can meet emerging business needs in a constantly changing market.

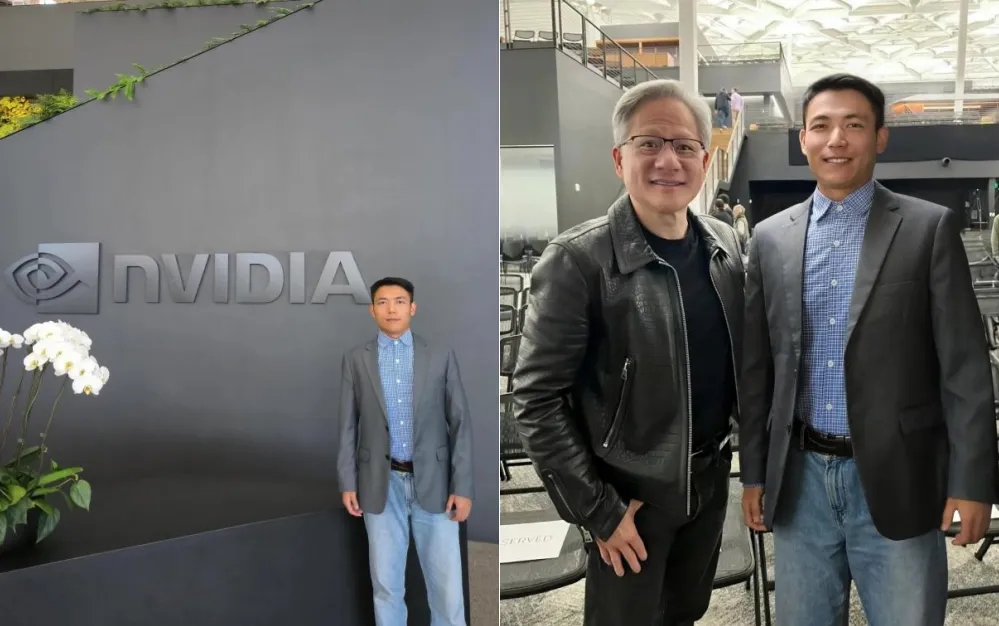

On the last day of the visit, the Fengrunda Technology US team visited the Silicon Valley headquarters of NVIDIA, a global leader in AI chips. The advent of large models and generative AI has ignited a race for computing power, the core foundation and fuel of the AI era. As AI drives ever-higher demand for computing power, our data center core switches stand to gain new opportunities.

The AI wave has made NVIDIA the focus of Silicon Valley. NVIDIA founder Jensen Huang (Huang Renxun) is a committed long-termist who kept betting on CUDA for some 20 years despite public skepticism, and that bet paid off when the AI wave arrived. The CUDA architecture greatly accelerated the use of GPUs for general-purpose computing, which allowed NVIDIA to stand out in the AI era. Its tightly integrated platform of GPU hardware plus the CUDA software ecosystem gives NVIDIA a dominant share of over 90% of the current GPU market. Behind apparent luck there are often difficult choices and sustained effort that demand strong strategic resolve and perseverance. Had Huang’s conviction wavered at any point over those 20 years, NVIDIA would not be what it is today.

Through this visit to Silicon Valley, Fengrunda Technology not only consolidated its position in the US market but also demonstrated its strength and vision in high-speed networking to the global market. The visit also deepened communication and cooperation with global channel partners, laying a solid foundation for the company’s long-term development strategy. Fengrunda Technology’s continuous innovation and keen insight into market trends will help the company secure a stronger position in the global market and provide more business opportunities and technical support for its partners. As the company continues to grow, we look forward to Fengrunda Technology playing a larger role in the coming network technology revolution.